Start a Google Cloud VM instance from an image shared by another project or organization

If you have multiple projects or organizations and want to clone a machine that is already configured, you can create an image from that VM instance.
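Creating the image can also be done from the command line. The following is a minimal sketch, not the only way to do it: it assumes the image is built from the VM's boot disk, and every value (project ID, disk name, zone, image name) is a hypothetical placeholder. The `run` helper is an illustration device that only prints the command unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Sketch: create a reusable image from the boot disk of an existing VM.
# All values below are hypothetical placeholders; replace them with your own.
SOURCE_PROJECT="source-project-id"   # project that owns the configured VM
SOURCE_DISK="configured-vm"          # boot disk name (often the same as the VM name)
DISK_ZONE="us-central1-a"            # zone where that disk lives
IMAGE_NAME="configured-vm-image"     # name to give the new image

# Print the command by default; set DRY_RUN=0 to actually execute it.
run() {
  if [ "${DRY_RUN:-1}" = "0" ]; then "$@"; else echo "$*"; fi
}

create_image() {
  run gcloud compute images create "$IMAGE_NAME" \
    --project "$SOURCE_PROJECT" \
    --source-disk "$SOURCE_DISK" \
    --source-disk-zone "$DISK_ZONE"
}

create_image
```

Stopping the VM before creating the image is the safer option if you want a consistent snapshot of the disk.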

However, you first have to give the other project or organization access to that image.

Here is how to do it:

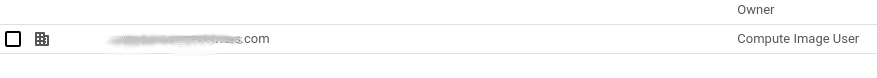

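Share the image with the organization in Images and in IAM

In both IAM and on the image itself (Compute Engine > Images), grant the Compute Image User role (`roles/compute.imageUser`) to the principal (user, group, or service account) from the other project or organization.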

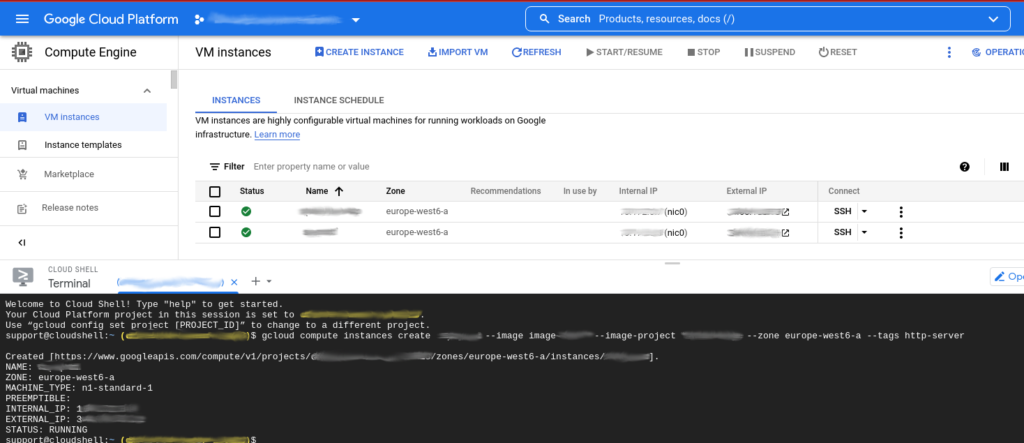
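The same two grants can be sketched with `gcloud` instead of the console. This assumes the role is given to a single user; the member address, project ID, and image name are hypothetical placeholders. As a convenience for this example only, the `run` helper prints each command unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Sketch: grant Compute Image User on the image and in the project's IAM policy.
# All values below are hypothetical placeholders; replace them with your own.
SOURCE_PROJECT="source-project-id"       # project that owns the image
IMAGE_NAME="configured-vm-image"         # image being shared
MEMBER="user:someone@example.com"        # who receives access (user:, group:, serviceAccount:, domain:)
ROLE="roles/compute.imageUser"           # Compute Image User

# Print the command by default; set DRY_RUN=0 to actually execute it.
run() {
  if [ "${DRY_RUN:-1}" = "0" ]; then "$@"; else echo "$*"; fi
}

# Grant the role on this one image only.
grant_on_image() {
  run gcloud compute images add-iam-policy-binding "$IMAGE_NAME" \
    --project "$SOURCE_PROJECT" \
    --member "$MEMBER" \
    --role "$ROLE"
}

# Grant the role in the project's IAM policy (applies to every image in the project).
grant_on_project() {
  run gcloud projects add-iam-policy-binding "$SOURCE_PROJECT" \
    --member "$MEMBER" \
    --role "$ROLE"
}

grant_on_image
grant_on_project
```

To share with a whole team rather than one person, a `group:` or `domain:` member can be used in place of `user:`.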

Start the VM using the image shared from another project or organization

gcloud compute instances create INSTANCE-NAME --image SHARED-IMAGE-NAME --image-project PROJECT-NAME --zone ZONE --tags http-server

- INSTANCE-NAME: Name of the VM instance you are creating (it will appear in the VM list)

- SHARED-IMAGE-NAME: Name of the image you shared with the organization

- PROJECT-NAME: ID of the project that contains the image you shared with the organization

- ZONE: Zone in which the VM will be created (for example `us-central1-a`)
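As a worked example of the placeholders above, here is a sketch with hypothetical values filled in. It assumes you first want to confirm that the shared image is visible from the destination account, using `gcloud compute images describe`. None of the names come from a real setup, and the `run` helper only prints the commands unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Sketch: check that the shared image is visible, then create a VM from it.
# All values below are hypothetical placeholders; replace them with your own.
INSTANCE_NAME="my-new-vm"                # INSTANCE-NAME
SHARED_IMAGE_NAME="configured-vm-image"  # SHARED-IMAGE-NAME
IMAGE_PROJECT="source-project-id"        # PROJECT-NAME (project that owns the image)
ZONE="us-central1-a"                     # ZONE

# Print the command by default; set DRY_RUN=0 to actually execute it.
run() {
  if [ "${DRY_RUN:-1}" = "0" ]; then "$@"; else echo "$*"; fi
}

# Fails with a permission error if the image has not been shared with you.
check_image() {
  run gcloud compute images describe "$SHARED_IMAGE_NAME" \
    --project "$IMAGE_PROJECT"
}

create_vm() {
  run gcloud compute instances create "$INSTANCE_NAME" \
    --image "$SHARED_IMAGE_NAME" \
    --image-project "$IMAGE_PROJECT" \
    --zone "$ZONE" \
    --tags http-server
}

# Only create the VM if the image check succeeds.
check_image && create_vm
```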

The VM is now running from an image that belongs to another project and organization!